Spatialized Audio For Virtual Reality

Creating surround sound for traditional computer games and movies is relatively straightforward. It's a matter of positioning sound sources on a two-dimensional plane and reproducing them through an array of stationary speakers to create a multi-directional soundscape.

Spatialized audio for virtual reality depends on headphones or earbuds instead of speakers, so you can't locate sounds in the room naturally with your ears. Instead, head position and orientation are tracked in real time to simulate sound sources and emitters ahead of and behind you as well as above and below you, with integral cues for reflection, distance, and intensity.
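To make the head-tracking idea concrete, here is a minimal sketch of how a tracked head pose can be turned into listener-relative cues. It is not the Oculus Audio SDK's method. The function name `localize`, the coordinate convention (right-handed, +x right, +y up, listener facing -z by default), and the clamped inverse-distance gain are all assumptions chosen for illustration.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(v):
    n = math.sqrt(_dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)

def localize(head_pos, head_forward, head_up, source_pos, ref_distance=1.0):
    """Hypothetical helper: return (azimuth_deg, elevation_deg, gain)
    for a sound source as heard from a tracked head pose."""
    # Build the head's local axes from the tracked forward and up vectors.
    forward = _normalize(head_forward)
    right = _normalize(_cross(forward, head_up))
    up = _cross(right, forward)

    # Express the source offset in head-local coordinates.
    offset = _sub(source_pos, head_pos)
    distance = math.sqrt(_dot(offset, offset))
    x, y, z = _dot(offset, right), _dot(offset, up), _dot(offset, forward)

    # Azimuth: 0 is straight ahead, +90 is to the right, +/-180 is behind.
    azimuth = math.degrees(math.atan2(x, z))
    # Elevation: +90 is directly overhead, -90 directly below.
    elevation = 0.0
    if distance > 0.0:
        elevation = math.degrees(math.asin(max(-1.0, min(1.0, y / distance))))

    # Assumed intensity cue: inverse-distance rolloff, clamped so that
    # sources closer than ref_distance are not amplified.
    gain = ref_distance / max(distance, ref_distance)
    return azimuth, elevation, gain

# A source two meters behind a listener who faces -z.
print(localize((0, 0, 0), (0, 0, -1), (0, 1, 0), (0, 0, 2)))
```

Calling `localize` again with a new forward vector, as would happen every frame when the player turns their head, changes the azimuth of a source even though the source itself has not moved. A real spatializer would feed angles like these into head-related filtering and reflection modeling, which this sketch leaves out.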

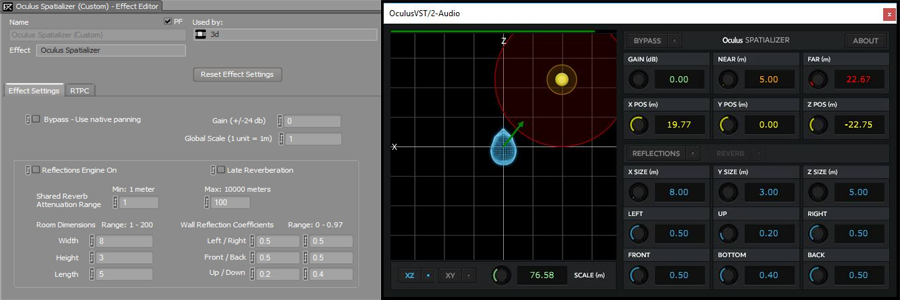

VR headset manufacturers provide SDKs for programmers to handle spatialized audio. In Unity, the Oculus Audio SDK exposes programmable elements of the Rift's audio system and headphones. If you're a VR developer, you'll want to read through the documentation to learn about this API (application programming interface) and how to use it. The screencaps above show GUI elements of the ancillary Spatializer app from Oculus.

Sounds emitted from a translating NPC (a non-player character moving through space) are more expensive in terms of processing overhead than sounds from stationary audio emitters. This is because the NPC and the player (a person wearing a VR headset) could be moving simultaneously in different, unpredictable directions, which compounds the cost of source localization and reverberation modeling, for example. A powerful PC configured for VR won't have much trouble rendering spatialized audio that has been optimized in the usual ways. To some extent, VR audio design is about employing rapidly evolving development platform interfaces, so make sure you have the latest builds of whatever IDE you're using, and the latest SDKs for your platform as well.
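One way to picture the extra cost of a moving emitter is to count how often room-relative data has to be recomputed. The sketch below is only an illustration and does not reflect how any particular SDK is implemented. The `Emitter` class, the `step` function, and the use of nearest-wall distance as a stand-in for reverberation modeling are all hypothetical.

```python
class Emitter:
    """Hypothetical audio emitter inside a box-shaped room."""
    def __init__(self, position, velocity=(0.0, 0.0, 0.0)):
        self.position = position
        self.velocity = velocity
        self.moving = any(c != 0.0 for c in velocity)
        self.wall_distance = None   # cached room-relative value
        self.room_updates = 0       # how many times the cache was rebuilt

def nearest_wall_distance(position, room_size):
    """Distance to the closest wall of a room spanning (0,0,0)..room_size.
    A stand-in for a far more costly reverberation calculation."""
    return min(min(p, s - p) for p, s in zip(position, room_size))

def step(emitters, room_size, dt):
    """Advance one frame, recomputing room data only where it can change."""
    for e in emitters:
        if e.moving:
            e.position = tuple(p + v * dt for p, v in zip(e.position, e.velocity))
        # A stationary emitter keeps its cached value after the first frame;
        # a moving emitter has to redo the room calculation every frame.
        if e.moving or e.wall_distance is None:
            e.wall_distance = nearest_wall_distance(e.position, room_size)
            e.room_updates += 1

radio = Emitter((1.0, 1.0, 1.0))                  # stationary prop
npc = Emitter((2.0, 0.0, 2.0), (0.5, 0.0, 0.0))   # walking NPC
for _ in range(90):                               # 90 frames, about one second
    step([radio, npc], room_size=(10.0, 3.0, 10.0), dt=1.0 / 90.0)
print(radio.room_updates, npc.room_updates)
```

In this sketch the stationary emitter pays for the room calculation once, while the moving NPC pays for it on every frame. Listener-relative cues still have to be refreshed for both whenever the player's head moves, which is why the two kinds of motion compound.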

T.M.Wilcox, February, 2017